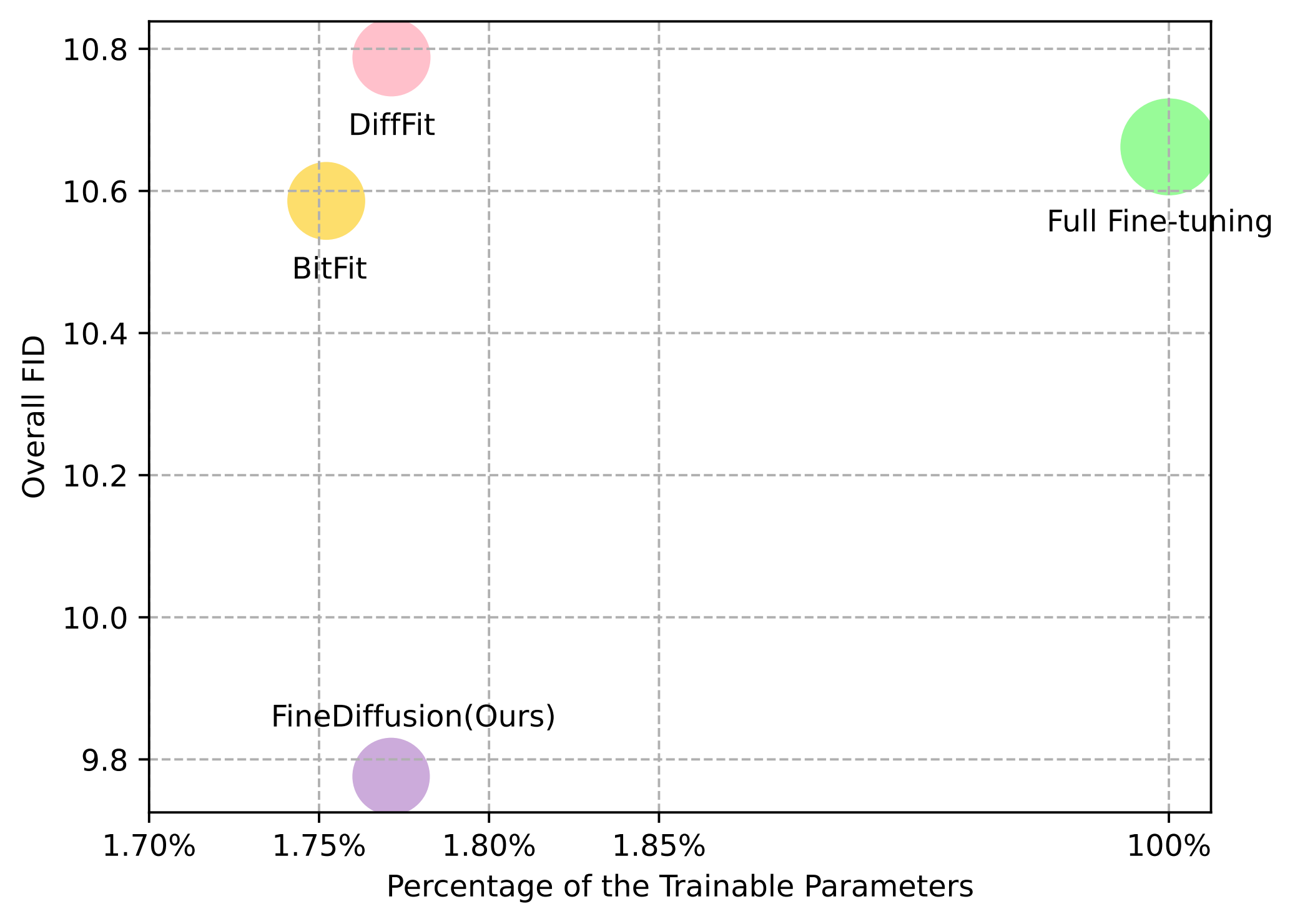

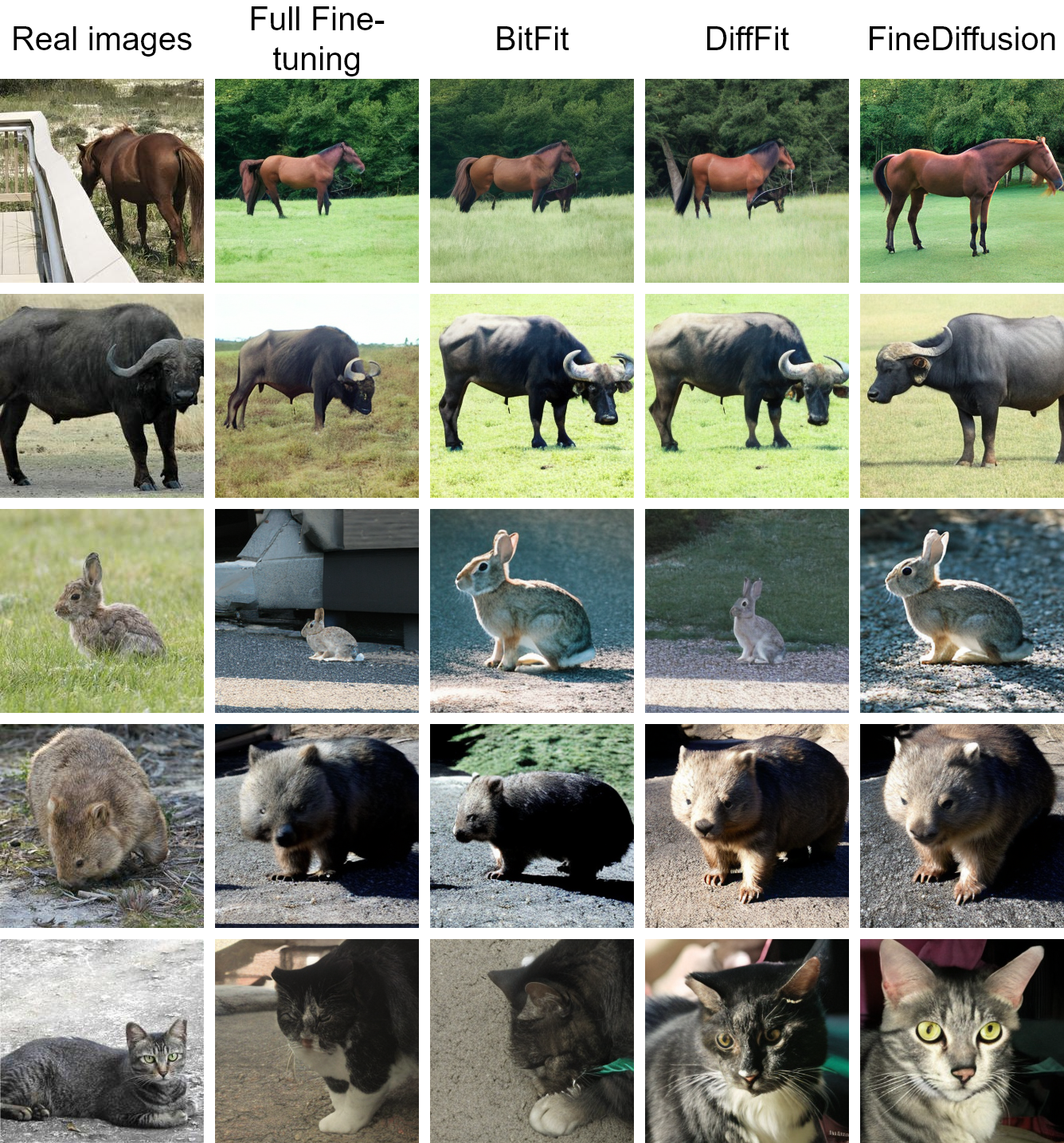

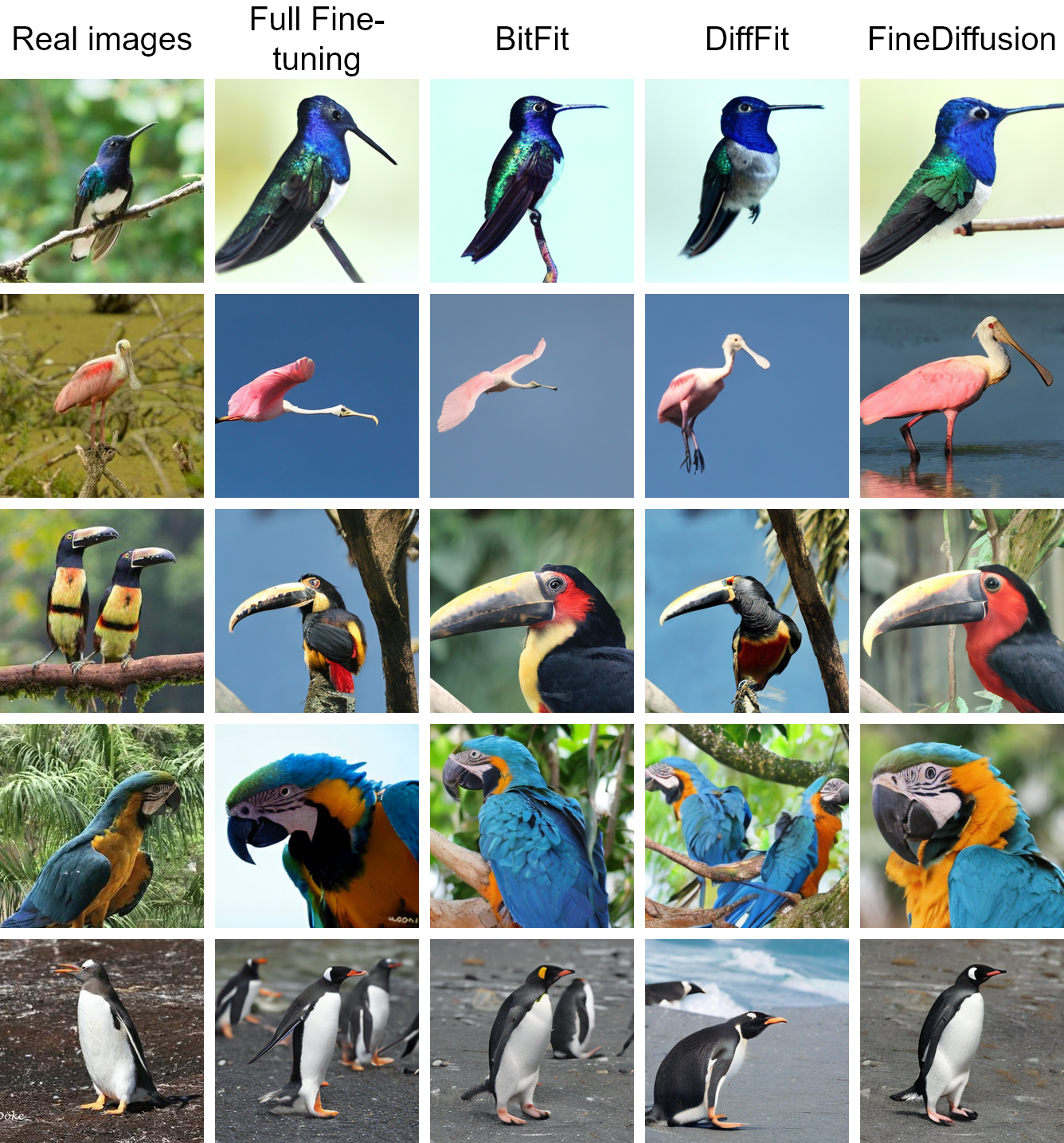

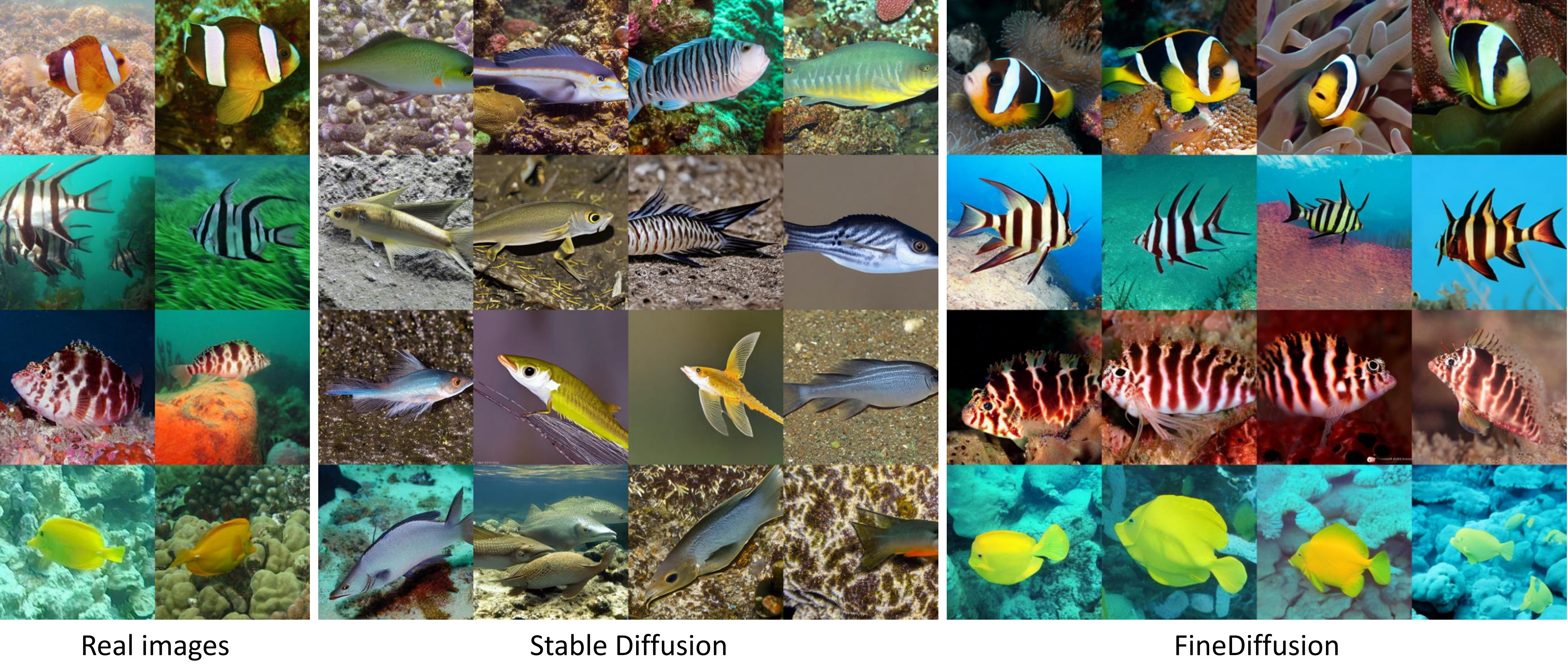

Class-conditional image generation with diffusion models is renowned for producing high-quality and diverse images. However, most prior efforts focus on general categories, e.g., the 1,000 classes in ImageNet-1k. A more challenging task, large-scale fine-grained image generation, remains largely unexplored. In this work, we present FineDiffusion, a parameter-efficient strategy for fine-tuning large pre-trained diffusion models that scales to fine-grained image generation with 10,000 categories. FineDiffusion significantly accelerates training and reduces storage overhead by fine-tuning only the tiered class embedder, the bias terms, and the normalization layers' parameters. To further improve generation quality for fine-grained categories, we propose a novel sampling method that replaces conventional classifier-free guidance with superclass-conditioned guidance, which is specifically tailored to fine-grained categories. Compared to full fine-tuning, FineDiffusion achieves a remarkable 1.56x training speed-up and requires storing merely 1.77% of the total model parameters, while achieving a state-of-the-art FID of 9.776 on image generation of 10,000 classes. Extensive qualitative and quantitative experiments demonstrate the superiority of our method over other parameter-efficient fine-tuning methods.
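As a rough illustration of the sampling idea, the sketch below combines two noise predictions at a single denoising step. It assumes that superclass-conditioned guidance keeps the usual classifier-free guidance form and swaps the unconditional prediction for a superclass-conditioned one. The exact formula is not stated on this page, and the function name `guided_noise` and the toy values are hypothetical.

```python
def guided_noise(eps_super, eps_sub, scale):
    """Extrapolate from the superclass prediction toward the subclass one.

    Assumption: same form as classifier-free guidance, with the
    unconditional prediction replaced by a superclass-conditioned one.
    """
    if len(eps_super) != len(eps_sub):
        raise ValueError("predictions must have the same length")
    return [s + scale * (f - s) for s, f in zip(eps_super, eps_sub)]


# Toy predictions for a 3-element "image"; in practice these would be
# tensors produced by the denoising network at one sampling step.
eps_super = [0.0, 1.0, -1.0]  # conditioned on the superclass label
eps_sub = [0.5, 1.0, -2.0]    # conditioned on the fine-grained label

print(guided_noise(eps_super, eps_sub, scale=1.0))  # same as eps_sub
print(guided_noise(eps_super, eps_sub, scale=2.0))  # pushed past eps_sub
```

Under this assumption, a scale of 1 reduces to plain subclass-conditioned sampling, and larger scales amplify whatever distinguishes the subclass from its superclass.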

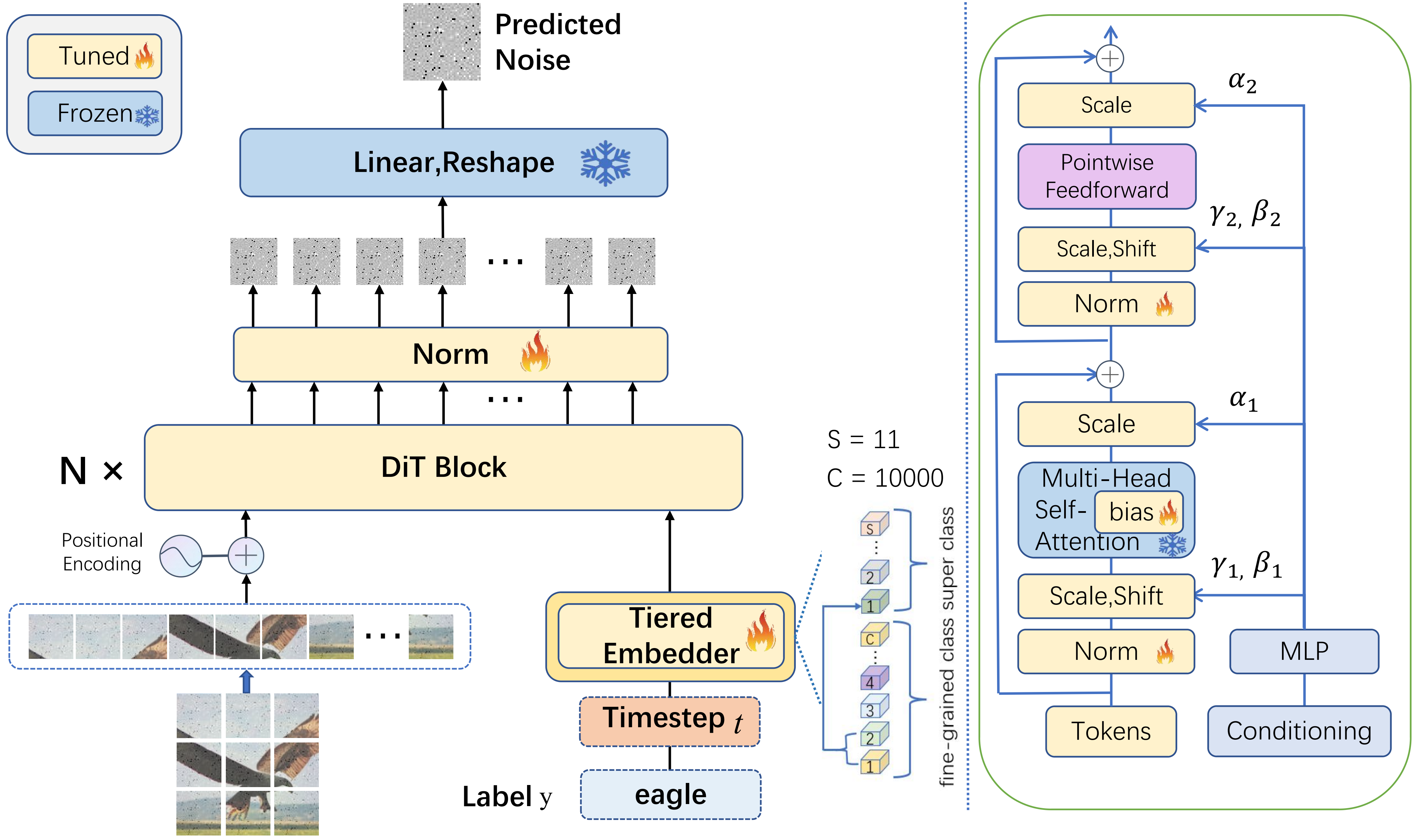

We introduce a novel parameter-efficient fine-tuning method called FineDiffusion for fine-grained image generation. We first propose a hierarchical class embedder called TieredEmbedder, which models the data distribution of both superclass and subclass samples.
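To make the idea of a hierarchical class embedder concrete, here is a minimal sketch in plain Python. It assumes that a subclass embedding is the elementwise sum of a shared superclass vector and a subclass-specific vector. This page does not specify how the two tiers are combined, so the class `TieredEmbedderSketch`, its methods, and the sum are all illustrative assumptions rather than the actual TieredEmbedder.

```python
import random


class TieredEmbedderSketch:
    """Toy two-tier class embedder (hypothetical design, not the paper's code)."""

    def __init__(self, sub_to_super, dim, seed=0):
        rng = random.Random(seed)
        # sub_to_super[i] is the superclass index of subclass i.
        self.sub_to_super = list(sub_to_super)
        num_super = max(self.sub_to_super) + 1
        self.super_table = [
            [rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(num_super)
        ]
        self.sub_table = [
            [rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in self.sub_to_super
        ]

    def embed(self, subclass_id):
        """Subclass embedding: shared superclass part plus subclass part."""
        sup = self.super_table[self.sub_to_super[subclass_id]]
        sub = self.sub_table[subclass_id]
        return [a + b for a, b in zip(sup, sub)]  # assumed: elementwise sum

    def embed_superclass(self, superclass_id):
        """Superclass-only embedding, e.g. for superclass conditioning."""
        return list(self.super_table[superclass_id])


# Four subclasses grouped under two superclasses.
embedder = TieredEmbedderSketch(sub_to_super=[0, 0, 1, 1], dim=4)
print(embedder.embed(0))
print(embedder.embed_superclass(0))
```

In this sketch, subclasses 0 and 1 share the same superclass component, so information learned for the superclass is reused by every subclass beneath it.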

By fine-tuning only the proposed TieredEmbedder, the bias terms, and the scale and offset parameters of the normalization layers, our method significantly speeds up training while reducing model storage overhead.
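The parameter selection described above can be sketched as a simple name-based filter. The parameter names, the element counts, and the matching rule in `is_trainable` are all hypothetical; a real model would use its own naming scheme, and the resulting fraction here has no relation to the 1.77% reported for FineDiffusion.

```python
def is_trainable(name):
    """Assumed naming rule: embedder params, any bias, and norm scale/offset."""
    return (
        name.startswith("class_embedder.")
        or name.endswith(".bias")
        or ".norm" in name
    )


# Hypothetical parameter names and element counts for one transformer block.
param_counts = {
    "class_embedder.super_table": 1_000,
    "class_embedder.sub_table": 10_000,
    "blocks.0.attn.qkv.weight": 3_000_000,
    "blocks.0.attn.qkv.bias": 3_000,
    "blocks.0.norm1.weight": 1_000,
    "blocks.0.norm1.bias": 1_000,
    "blocks.0.mlp.fc1.weight": 4_000_000,
    "blocks.0.mlp.fc1.bias": 4_000,
}

# Everything not selected stays frozen and need not be stored per task.
trainable = {n: c for n, c in param_counts.items() if is_trainable(n)}
fraction = sum(trainable.values()) / sum(param_counts.values())

print(sorted(trainable))
print(f"trainable fraction: {fraction:.2%}")
```

Because the large weight matrices stay frozen, only the small selected subset has to be updated during training and saved afterwards, which is where the storage saving comes from.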

@article{pan2024finediffusion,
  title={FineDiffusion: Scaling up Diffusion Models for Fine-grained Image Generation with 10,000 Classes},
  author={Pan, Ziying and Wang, Kun and Li, Gang and He, Feihong and Li, Xiwang and Lai, Yongxuan},
  journal={arXiv preprint arXiv:2402.18331},
  year={2024}
}